To follow up on part 1 of this blog post series, we are going to look at a different attack path for consent phishing: instead of creating a new application, we will impersonate legitimate Microsoft applications, such as Microsoft Office. This attack targets legitimate and necessary Microsoft functionality that authenticates a device to Microsoft Office. Given the tight time constraints on the social engineering and the limited access that results, this may prove a challenging attack to perform. However, with a successful attack you can perform any action that Microsoft Office can! Furthermore, because this attack follows the legitimate authentication flow, security teams investigating the source of compromise may have a very difficult time finding out what happened.

Device Code Consent Phishing

Microsoft allows users to sign in to applications installed on their computer, such as Word and Excel. This is common functionality that has been around for years. It introduces some challenges; for example, Microsoft needs to treat the devices performing the authentication as untrusted. Device onboarding follows a public client, code-based OAuth2 flow, where a unique code is provided to a user to allow them to sign in on the new device at the public endpoint https://microsoft.com/devicelogin. While this functionality is necessary and follows the OAuth2 specification, an attacker can abuse the same OAuth code flow to request valid access tokens while impersonating Microsoft applications. This is possible because device onboarding is classified as a public application: the authentication attempt comes from a device that is under the control of an untrusted party (i.e. not Microsoft).

Public Clients vs Confidential Clients in Azure

There are two classifications that applications can have in the OAuth2 specification: public and confidential. A confidential application is one that can hold its credentials securely without exposing them to the user. This encompasses applications that do not run on a user-controlled device, like a web application. The other classification, public, refers to an application that cannot securely store credentials, such as mobile and desktop applications, because they run on the user’s device. If an application or application client runs on a user-controlled system, it should be treated as untrusted and should not store credentials. Therefore, common OAuth flows like the one we investigated in the previous article, where a stored secret was used as part of authentication, are not possible in this scenario. One way to onboard an untrusted device is with user consent, where the user signs in with their username and password and accepts a consent prompt. The short table of examples below is from the Microsoft documentation.

| Public | Confidential |
|---|---|
| Desktop Applications | Web Applications |
| Browserless APIs | Web APIs |
| Mobile Applications | Service/Daemon |

Given that Office 365 is intended to run as a desktop and mobile application, it is necessary for Microsoft to implement the Public client authorisation flow for these platforms.
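To make the distinction concrete, here is a minimal Python sketch of how the two client types differ when they ask for a token. It is an illustration only: Python is used for convenience rather than the PowerShell of the PoC, the helper name `build_token_request` and all identifier values are hypothetical placeholders, and `client_secret` is the standard OAuth2 parameter that a confidential client would add.

```python
from typing import Dict, Optional


def build_token_request(client_id: str, grant_type: str,
                        client_secret: Optional[str] = None,
                        **grant_params: str) -> Dict[str, str]:
    """Assemble the form fields of an OAuth2 token request.

    Hypothetical helper for illustration: a confidential client proves its
    identity with a secret it can keep private, while a public client has
    nothing to add beyond its (publicly known) client_id.
    """
    body = {"client_id": client_id, "grant_type": grant_type, **grant_params}
    if client_secret is not None:
        # Only meaningful when the secret never leaves a trusted server.
        body["client_secret"] = client_secret
    return body


# Confidential client (e.g. a web application backend); placeholder values.
confidential = build_token_request(
    "example-web-app-id", "client_credentials",
    client_secret="kept-on-the-server")

# Public client (e.g. a desktop application); there is no secret to present.
public = build_token_request(
    "example-desktop-app-id",
    "urn:ietf:params:oauth:grant-type:device_code",
    code="example-device-code")

print("client_secret" in confidential, "client_secret" in public)
```

Because the public request carries nothing that only the genuine application could know, anyone who knows the client ID can start the same flow.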

How can we abuse this?

If you want to explore the attack below yourself, I found Netskope’s phish_oauth repository to be very useful for gaining a practical understanding of what was going on. I also adapted their repository for the attack example presented in this article.

The idea behind the attack is that Public applications can be authenticated with user consent, and we want to do exactly that with Microsoft applications. Microsoft Office is by definition a public application: it runs on your device and should not be trusted to store credentials. Thus, it needs to use the same process as every other Public client application, which means that if we can socially engineer a user into giving consent, we can get an access token for Microsoft Office as that user.

The beginning of any cyber attack is always the same: planning. We need to specify a service that we want to target and impersonate. In this case, I would like to get an access token for Microsoft Office that can be used to query the Microsoft Graph API, so we’ll assign both as variables in our PoC script.

$client_id_office = "d3590ed6-52b3-4102-aeff-aad2292ab01c"

$resource_graph = "https://graph.microsoft.com"

The $client_id_office variable is an Application ID that can be pulled from the Microsoft documentation, alongside a list of other Microsoft Application IDs. Next, we need to add these variables to the body of an HTTP request to be sent to Microsoft to retrieve a device code:

### Invoke the REST call to get device and user codes

###

$body=@{

"client_id" = $client_id_office

"resource" = $resource_graph

}

$authResponse = Invoke-RestMethod -UseBasicParsing -Method POST -Uri "https://login.microsoftonline.com/common/oauth2/devicecode?api-version=1.0" -Body $body

### Retrieve the key fields in the response

###

$user_code = $authResponse.user_code # for user to input in the Microsoft auth screen. Verifies user.

$device_code = $authResponse.device_code # to be used later to retrieve the user oauth tokens after user auth

$interval = $authResponse.interval # the interval in secs to poll for the user oauth tokens

$expires = $authResponse.expires_in # the time (secs) that the user and device codes are valid for

$verification_uri = "https://microsoft.com/devicelogin" # verification URI (login page) for the user

The request being sent above is the start of the OAuth process to Microsoft as Microsoft Office. We’re effectively saying “Microsoft Office would like to authenticate a user, please create a unique code for us to use for this process.”

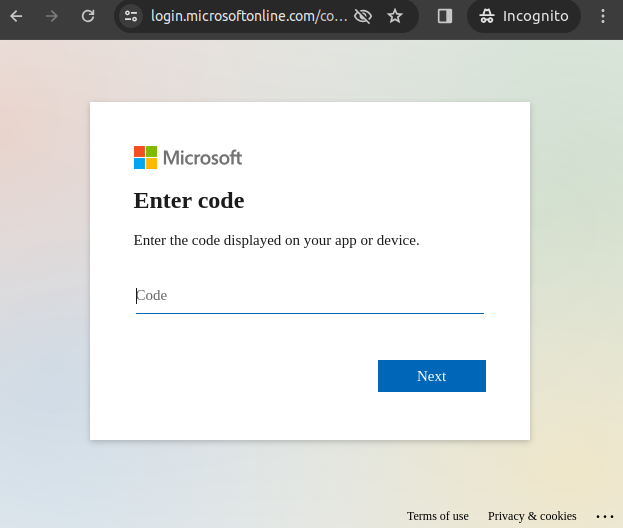

Once we have done that, Microsoft will return a device code together with a unique user code, an 8 character alphanumeric code. The user code needs to be entered by the user at the https://microsoft.com/devicelogin endpoint, so next we need to persuade the user to go to the device login endpoint, which looks like this:

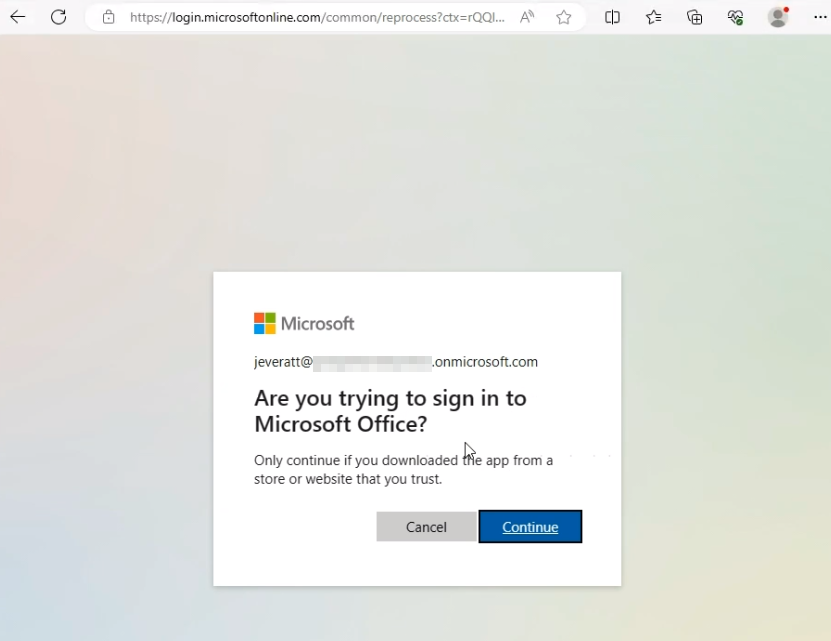

Once the user enters the code, which is only valid for 15 minutes (so socially engineer quickly!), they will be prompted to log in with the normal Microsoft login page. Once they’re authenticated, Microsoft will ask them if they are trying to sign in to Microsoft Office.

Once the user has clicked Continue, they will have signed in to Microsoft Office. All that remains for us to do at this point is send an HTTP POST request to https://login.microsoftonline.com/Common/oauth2/token?api-version=1.0 with the following parameters:

client_id: same as the original request, representing the application ID for which access is being requested.

grant_type = urn:ietf:params:oauth:grant-type:device_code: sets the OAuth flow we will be using, in this case a Public flow called device code.

code: the device code we received in response to the first HTTP request.

resource: same as the original request.
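The token request and the waiting that goes with it can be sketched as follows. This is a minimal Python illustration, not the PoC itself (which is PowerShell): the function names are hypothetical, `post` is a caller-supplied stand-in for the real HTTP call, and the `authorization_pending` error name is taken from the OAuth2 device authorization grant specification, so treating it as the exact value returned by this endpoint is an assumption.

```python
import time
from typing import Callable, Dict

DEVICE_CODE_GRANT = "urn:ietf:params:oauth:grant-type:device_code"


def build_token_body(client_id: str, device_code: str,
                     resource: str) -> Dict[str, str]:
    """Form fields for the token request, one per parameter listed above."""
    return {
        "client_id": client_id,
        "grant_type": DEVICE_CODE_GRANT,
        "code": device_code,
        "resource": resource,
    }


def poll_for_token(post: Callable[[Dict[str, str]], dict],
                   body: Dict[str, str],
                   interval: int,
                   expires_in: int,
                   sleep: Callable[[float], None] = time.sleep) -> dict:
    """Poll until the user finishes signing in or the code expires.

    `post` is assumed to send `body` to the token endpoint and return the
    decoded JSON reply; it is injected so the sketch needs no network.
    """
    waited = 0
    while waited < expires_in:
        reply = post(body)
        if "access_token" in reply:
            return reply
        if reply.get("error") != "authorization_pending":
            # Anything other than "still waiting" is treated as fatal here.
            raise RuntimeError(f"token request failed: {reply.get('error')}")
        sleep(interval)
        waited += interval
    raise TimeoutError("device code expired before the user signed in")


# Stand-in for the real endpoint: pending twice, then a (fake) token.
replies = iter([{"error": "authorization_pending"},
                {"error": "authorization_pending"},
                {"access_token": "example-token"}])
body = build_token_body("example-client-id", "example-device-code",
                        "https://graph.microsoft.com")
result = poll_for_token(lambda _body: next(replies), body,
                        interval=5, expires_in=900, sleep=lambda _s: None)
print(result["access_token"])
```

The `interval` and `expires_in` arguments correspond to the values returned with the device code in the first response, which is why the 15 minute window bounds how long the loop can run.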

Once that has been completed, we will receive an access token with a number of scopes. Most importantly, the token will include the following scopes, several of which would normally require explicit Global Administrator or Privileged Role Administrator approval in the consent approval prompt. For more information about this, please refer to the previous article in this series.

Calendars.ReadWrite

Contacts.ReadWrite

DataLossPreventionPolicy.Evaluate

Directory.AccessAsUser.All

Directory.Read.All

Files.Read.All

Files.ReadWrite.All

Group.Read.All

Group.ReadWrite.All

InformationProtectionPolicy.Read

Mail.ReadWrite

Organization.Read.All

People.Read.All

Printer.Read.All

SensitiveInfoType.Detect

SensitiveInfoType.Read.All

SensitivityLabel.Evaluate

TeamMember.ReadWrite.All

TeamsTab.ReadWriteForChat

User.Read.All

User.ReadWrite

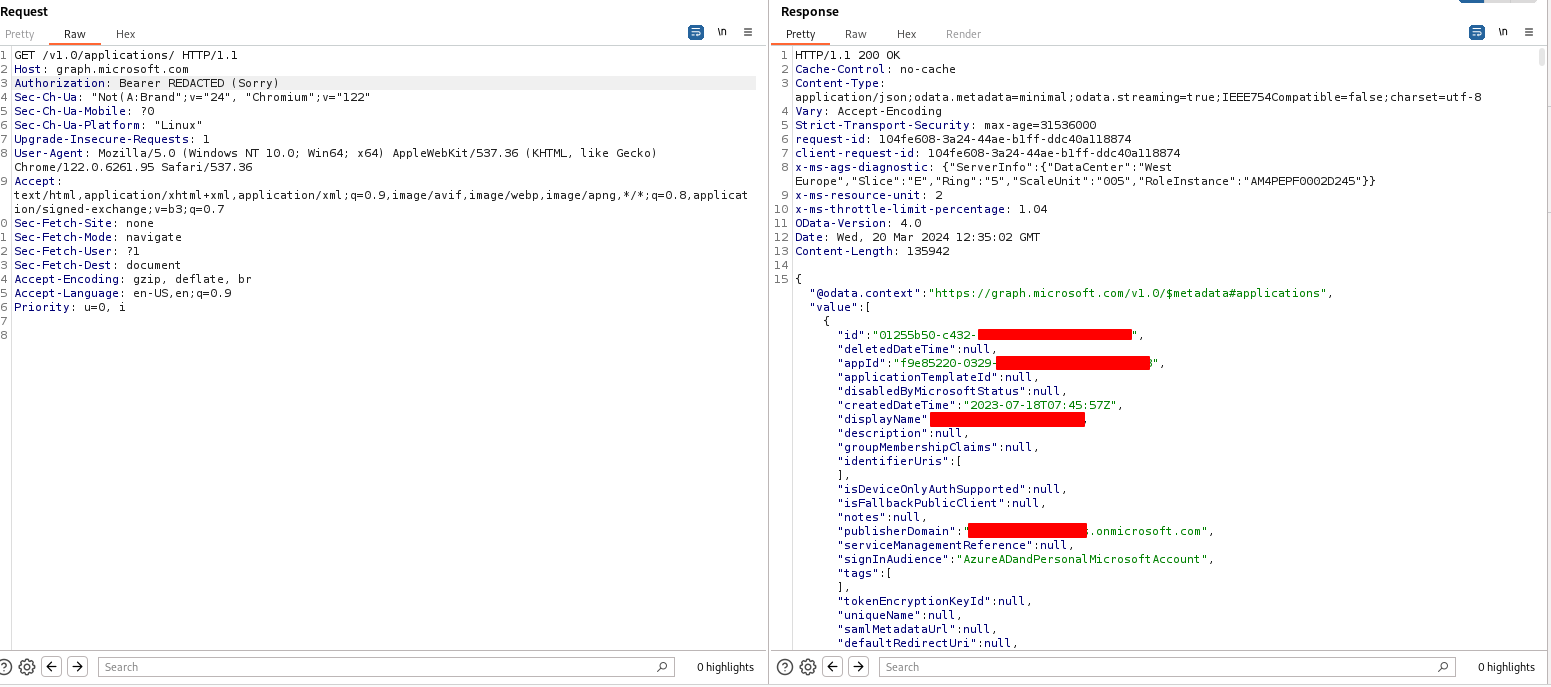

Users.Read

And now we are able to perform actions against the user’s account as the Microsoft Office application. For example, we could make a request to https://graph.microsoft.com/v1.0/applications, which lists all the applications in an Azure tenant and requires the Directory.Read.All scope, which would generally require administrator consent. The screenshot below shows the request successfully being made with a JWT requested under the guise of Microsoft Office.

As Microsoft Office, you are more limited in these scopes than you would be had normal consent been granted for them. If you try to perform an action outside of what Office does, you will get the following error:

{

"error": {

"code": "UnknownError",

"message": "Microsoft Office does not have authorization to call this API.",

"innerError": {

"date": "2023-11-28T08:44:20",

"request-id": "d87fcadf-ed02-4bff-9c24-f1da71f28cd5",

"client-request-id": "d87fcadf-ed02-4bff-9c24-f1da71f28cd5"

}

}

}

And the logs?

Well, for logs, you get one thing to track down: a valid sign-in to Microsoft Office from the user that was targeted. The login would appear legitimate and it would originate from the user’s location, IP address, and device. If a compromise were to happen in this way, it would be nigh on impossible to figure out the root cause from the logs alone. The reason is simply that we are abusing legitimate functionality; it’s how Office works.

The best chance of finding this compromise vector and narrowing down the root cause to device code consent phishing is by talking to the user. If they recently went through a device login, it should be possible to find evidence of communication that is anomalous or that originated from fake domains or unfamiliar numbers.

Conclusion

This is an interesting attack vector because it makes use of legitimate Microsoft Office applications as part of social engineering a victim, but it is limited by the tight time window for executing the attack. Furthermore, there are a lot of restrictions in place that mitigate its impact, but at the end of the day you gain the right to perform actions as Office, and that can be a lot of power, given the scopes granted to Office. The most interesting part of this vector for me is that I can’t think of a better way to implement this authentication flow: secrets would not work because anything client-side can’t be trusted, and any other solution would also have to work at scale. As such, as an M365 administrator, it is important to know that this attack vector exists. It is equally important for your users to know not to give away their consent freely and, specifically, to be educated on the implications of granting consent for Microsoft applications and the circumstances under which this would be appropriate.

As a final note, this is the current state of this research at MWR; we are still actively pursuing research along these lines and will provide more details in future blog posts, so please keep an eye out for them. Given the uniqueness of this attack vector, we felt it was important to start building awareness of this topic from our end. We also express our gratitude to others who have already done so publicly, like Jenko Hwong at Netskope and many others.