Introduction

When discussing Operations Security (OPSEC) in a cyber security context, focus is often placed on a malicious entity without looking into the OPSEC surrounding defenders. Many organisations make use of open-source tooling, paid-for services, and/or proprietary software. Regardless of the method, the pertinent questions become: Does your organisation know where the data submitted to these tools goes? Do your teams understand the risk associated with uploading data to public resources? Is your organisation at risk?

This blogpost will take you through an example of an investigation process, common tooling used by blue teams, and potential pitfalls associated with trusting publicly available software. It will also include several steps that your organisation can take to further secure its OPSEC processes to reduce the risk of exposing sensitive information.

What is OPSEC?

NIST defines Operations Security (OPSEC) as: “Systematic and proven process by which potential adversaries can be denied information about capabilities and intentions by identifying, controlling, and protecting generally unclassified evidence of the planning and execution of sensitive activities. The process involves five steps: identification of critical information, analysis of threats, analysis of vulnerabilities, assessment of risks, and application of appropriate countermeasures.”[1]

One of the core principles behind OPSEC is risk management, which strives to prevent the inadvertent or unintended exposure of sensitive information. While this can be done in several ways, what happens when the processes involved are inadvertently putting your organisation at risk? Let’s walk through the steps of a basic investigation and where things can potentially go wrong.

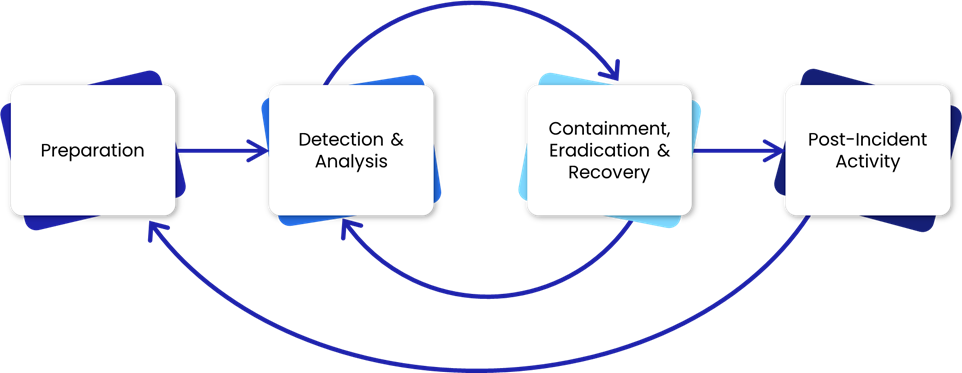

Typical Investigation Process

Figure 1 – Steps in a Typical Investigation Process

Once your blue team has received an alert (commonly referred to as an Event), it is often accompanied by data to indicate why the event was raised as malicious. In the case of a malicious process executing, phishing, etc., the security team will begin the investigation process.

“Copying data, searching for text strings, finding timestamps on files, reading call logs on a phone. These are basic elements of a digital investigation,” said Barbara Guttman, leader of NIST’s digital forensics research program and an author of the study. “And they all rely on fundamental computer operations that are widely used and well understood.”

While this definition covers the basics, it is important to understand that for blue team operators, this often encompasses several steps within the investigative process, including Preparation and Detection & Analysis (Triage and Analysis) which can be broken down as follows:

- Collect Data: Collect data and other critical artifacts from the system using forensic tools that can connect to the system without modifying any timestamps.

- Collect Logs: Collect the appropriate logs, including Windows Events, Proxy, Netflow, Anti-Virus, Firewall, etc.

- External Intelligence: Gather external intelligence based on identified Indicators of Compromise (IOCs), including file hashes, IP addresses, and domains that were discovered.

Generally, the triage process ends when the acquisition of images or forensic artifacts is completed and delivered for analysis. Before an alert calls for a full-blown Incident Response (IR) investigation, the blue team is responsible for analysing the potential IOCs (which may rely on external intelligence). Due to the number of alerts that blue teams / Security Operations Centers (SOCs) face on a daily basis, this step generally includes automation in order to provide a scalable approach towards Triage and Analysis. The automation of these processes allows analysts to quickly identify and separate typical commodity alerts from high-risk, high-impact attacks and ensure that they can conduct thorough, cognitive-bias free analysis while striving to operate within their service-level agreements (SLAs).

This process may seem straightforward, and there is an abundance of blogposts detailing the methods for first responders to deal with obtaining evidence, securing evidence, etc., but very few take a deeper look into External Intelligence (Step 3), which can often have unforeseen consequences.

Where can Sensitive Information Live?

Before we dive into pitfalls with external intelligence, it is important to understand that sensitive information can be found within a variety of sources. While not all organisations follow the same approach, it is common to see the following data types [2],[3]:

- Documents, emails, spreadsheets, pictures, videos, programs, applications, etc.

- Metadata associated with an object, such as file ownership, file permissions, EXIF data, etc.

- System files, logs, configuration files, etc.

The list above is not comprehensive, but it is clear that there are a variety of documents that can contain sensitive information about an individual, an environment, and an organisation. A more detailed breakdown of files containing sensitive information can be found within NIST’s white paper referenced above.

Common OPSEC failures

While the aforementioned processes are necessary, a large amount of time is spent on providing efficient response to ensure that the security team is working within their SLAs. This can often be at the expense of securing the data that has been obtained due to external stressors or incomplete/ineffective internal processes set forth by the organisation. To aid with the SLAs and the general workload, automation is often seen as the “saving grace”. Not only will it speed up processing time, but it also removes the human element, and thus human error. Since humans are often the weakest link within an organisation’s security, this improvement will prevent data from inadvertently ending up in the wrong hands, important details being overlooked or forgotten, and critical processes being bypassed. Or at least that’s how products promoting automation are often sold.

What happens when the processes that have been automated go wrong? What happens when the data that you are concerned about is sent to external tooling? The truth is, while automation is necessary to scale any team, this can also be a company’s downfall as these automated processes may not contain the logic that is required to determine what information is sensitive to your organisation. Effectively, if you have spent time automating processes without understanding the risks associated with them, you could be putting your organisation at risk.

“If you automate a poor process, you’re going to get poor results – just faster than you might have if you executed it manually. Optimisation – whether of IT or business processes – requires first understanding what you’re trying to optimise.”[4]

Alerting the Adversary

During an investigation, incident responders, blue teamers, SOC analysts, etc. are required to analyse data and events to determine whether they are a cause for concern. During this time, several techniques may be used (each with their own use cases), but sometimes these processes and tooling are used without enough insight into the potentially negative aspects.

If an investigation includes searching for answers in publicly available resources (e.g. VirusTotal, CyberChef, Twitter, etc.), it is very possible that the attacker is monitoring these sites as a method of determining whether their own payloads have been identified. When this happens, similar to engaging and interrupting their campaign, you may be tipping off the adversary. While this is not true for every case, an adversary may decide to prioritise covering their tracks (making it difficult to identify their initial access methods) or execute their goals with the limited time that they have left (destroying evidence, harming your environment, changing tactics and infrastructure, etc.). A good example of malicious users destroying evidence and changing tactics can be seen here after being tipped off via social media (such as Twitter).

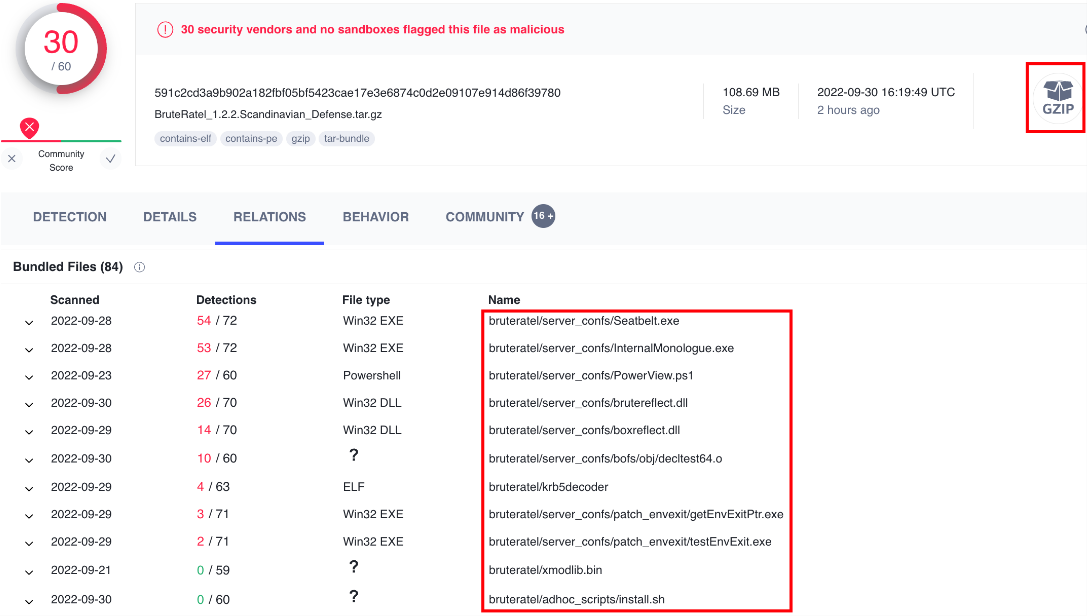

“Services such as VirusTotal allow users to upload samples of malware that are then tested against multiple AVs and the results are made available to the community. However, such services also provide simple queries which can be used to retrieve information from these services. An attacker could query for the hash of his malware and when a result is returned then someone must have uploaded the sample.”[5]

While tools like VirusTotal are fantastic, when potential malware samples are uploaded to the platform or other sandbox services, the files may be available to the public. Attackers can monitor these types of services for evidence of discovery, which could hinder an IR investigation. In their RedELK talk, Outflank covers this topic from an attacker’s perspective, including all of the items that they check for internally when performing adversary emulation assessments. Based on this, it is not a stretch to assume that threat actors are constantly deploying similar tactics when targeting networks.
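One way to reduce this exposure is to look up a hash instead of uploading the file itself. The following is a minimal sketch, assuming the VirusTotal v3 file report endpoint and its `x-apikey` header; the function names are hypothetical, and the request is only built here, not sent. Note that a hash lookup still reveals to the service that someone is interested in that hash, so it reduces the exposure rather than removing it.

```python
import hashlib
import urllib.request

# Assumed endpoint: VirusTotal API v3 file report, addressed by hash.
VT_FILE_LOOKUP = "https://www.virustotal.com/api/v3/files/{sha256}"


def sha256_of_bytes(data: bytes) -> str:
    """Hash the artefact locally so that its contents never leave the host."""
    return hashlib.sha256(data).hexdigest()


def build_hash_lookup(data: bytes, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a GET request that carries only the hash."""
    digest = sha256_of_bytes(data)
    return urllib.request.Request(
        VT_FILE_LOOKUP.format(sha256=digest),
        headers={"x-apikey": api_key},
        method="GET",
    )


if __name__ == "__main__":
    request = build_hash_lookup(b"example artefact contents", api_key="YOUR_API_KEY")
    # The request has no body: only the digest appears, inside the URL.
    print(request.get_method(), request.full_url)
```

If the lookup returns no result, that is the point at which an analyst would decide, with sign-off, whether a local sandbox or an upload is appropriate.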

As this blogpost does not focus on this aspect, MB Secure has a series of blogposts which contain guidance on not only limiting the risk of alerting the adversary, but also methods to ensure that an adversary is not alerted during the investigation process [6],[7],[8]. Additionally, if you have not looked into security incidents and removing adversaries from your environment, Huntress has published a blogpost about that specific topic.

Leaking Corporate Data through Public Tooling

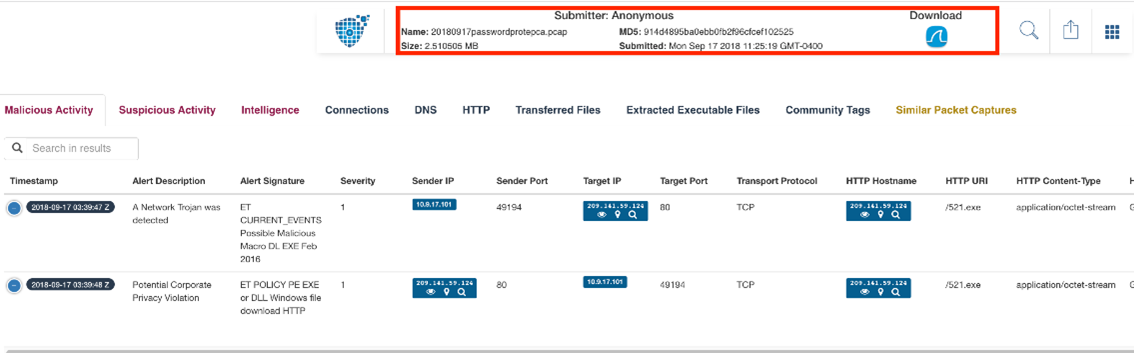

Now comes the interesting part which is often overlooked. In addition to tipping off adversaries, uploading this sensitive information to public tooling may inadvertently cause damage to your organisation by leaking sensitive/confidential information, disclosing information about the organisation’s internal tech stack, disclosing an active incident to the public, etc.

“When the analysts examine the files looking for clues of an attack, some of these files might contain confidential information. One of the common practices among the analysts is uploading malware samples to online services such as VirusTotal to check if it is malicious. However, the file uploaded to their servers remains available to the public, and therefore if one of these files contains sensitive information related to the company it would leak this content.” [5]

“When an incident happens, other than damage due to the cyber-attack, the company might suffer reputational damage. It is therefore important that the analysts do not accidentally inform the public that a certain company has been breached. Especially before the company has started with their recovery plan, which involves informing the public about the incident. Often the SOCs use codenames when referring to their customers so in the case the investigation becomes public for some reason, the identity of the customer is still protected.” [5]

As an example, two commonly used applications (VirusTotal and PacketTotal) were accessed without an account and it was possible to not only browse for information, but download potentially sensitive information for offline analysis, as can be seen in the screenshots below:

Figure 2 – VirusTotal Example Output

Figure 3 – PacketTotal Example Output


Positive Security did a fantastic job of diving into this exact topic for another online platform and found that samples uploaded to the URLScan platform leaked a large amount of information, including account creation links, password reset links, API keys, shared Google Drive documents, WebEx meeting recordings, invoices, and more.

“Sensitive URLs to shared documents, password reset pages, team invites, payment invoices and more are publicly listed and searchable on urlscan.io, a security tool used to analyse URLs. Part of the data has been leaked in an automated way by other security tools that accidentally made their scans public (as did GitHub earlier this year). Users of such misconfigured Security Orchestration, Automation and Response (SOAR) tools have a high risk of their accounts being hijacked via manually triggered password resets.” [9]

Even though VirusTotal [10],[11] and URLScan were mentioned above, organisations that are looking to protect themselves could use several other tools to gather information, for example: Spamhaus, PacketTotal, IBM X-Force, McAfee TrustedSource, Abuse.ch, tria.ge, Symantec EDR, Any.Run, etc. These are all platforms that are used by blue team operators daily, but they might not restrict access to the uploaded data in the way that your organisation might expect.

In addition to sensitive information being leaked via documents, URLs often contain sensitive information that should not be shared with the public. Examples of potentially sensitive URLs provided by Positive Security include:

- Hosted invoice pages

- DocuSign or other document signing requests

- Google Drive / Dropbox links

- Email unsubscribe links

- Password reset or create links

- Web service, meeting and conference invite links

- URLs including PII (email addresses) or API keys
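A simple pre-submission check can catch many of the URL types listed above before they reach a public scanner. The sketch below is illustrative only: the parameter hints, the email pattern, and the function names are assumptions, and the heuristics will produce both false positives and false negatives (for example, tokens embedded in the URL path are not detected).

```python
import re
from urllib.parse import parse_qsl, unquote, urlsplit

# Hypothetical hints; tune these to the services your organisation uses.
SENSITIVE_PARAM_HINTS = ("token", "key", "secret", "password", "reset", "invite")
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def sensitivity_reasons(url: str) -> list:
    """Return the reasons (if any) why a URL should not be submitted as-is."""
    parts = urlsplit(url)
    reasons = []
    if parts.username or parts.password:
        reasons.append("credentials in URL")
    # Decode percent-encoding first so that "%40" is seen as "@".
    if EMAIL_RE.search(unquote(url)):
        reasons.append("email address")
    for name, _value in parse_qsl(parts.query, keep_blank_values=True):
        if any(hint in name.lower() for hint in SENSITIVE_PARAM_HINTS):
            reasons.append(f"sensitive parameter: {name}")
    return reasons


def hostname_only(url: str) -> str:
    """Reduce a URL to its hostname, dropping path, query, and fragment."""
    return urlsplit(url).hostname or ""


if __name__ == "__main__":
    candidate = "https://example.com/reset?token=abc123&user=alice%40example.org"
    print(sensitivity_reasons(candidate))
    print(hostname_only(candidate))
```

When a URL is flagged, submitting only the hostname (or nothing at all) is a safer default than submitting the full link.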

If any of those tools/APIs are performing public URL scans, this could lead to systematic data leakage. As those advanced security tools are mostly installed in large corporations and government organisations, leaked information could be particularly sensitive. The list above mostly contains commercial products; however, the Positive Security blogpost’s integration page highlighted 22 open-source projects which could also have the same risks associated with their usage. While most of these products have warned against uploading personal information, a large number of organisations have automated the uploading of URLs, email attachments, malicious documents, and other IOCs without properly considering the associated risks.
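For automated integrations, it helps to set scan visibility explicitly rather than relying on a default. The sketch below assumes that the submission API accepts a JSON body with `url` and `visibility` fields, as urlscan.io documents at the time of writing; the function name is hypothetical, and the payload is only built here, not sent.

```python
import json


def build_scan_payload(url: str, visibility: str = "private") -> str:
    """Build a scan submission body, refusing anything other than private."""
    if visibility != "private":
        # Assumption: organisational policy only permits private scans.
        raise ValueError(f"visibility {visibility!r} is not permitted by policy")
    return json.dumps({"url": url, "visibility": visibility})


if __name__ == "__main__":
    print(build_scan_payload("https://example.com"))
```

Failing closed in this way means that a misconfigured playbook raises an error instead of silently publishing a scan.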

Uploading files to publicly available software may result in leaking confidential data which could result in a breach of confidentiality. Due to the sensitive nature of the information that blue teams handle on a daily basis, it is imperative that the organisation does not leak sensitive information via public data collectors. Additionally, the SOC has the responsibility to adhere to regulatory requirements (e.g. GDPR, POPI, etc.) and ensure that the organisation does not suffer reputational damage based on their own internal processes. While it is not possible to have a single response plan that addresses every potential attack, there are certain guidelines that can aid in dealing with a crisis and help to preserve important evidence for the subsequent investigation.

Additional areas of concern:

While not discussed directly in this post, this is also a common issue that we see revolving around the DevSecOps space. These teams often use public tooling with Cloud Sync such as:

- GitHub

- CloudSync with Postman

- StackOverflow

- etc.

These can all leak sensitive internal information, credentials, URLs, and more, to the public and open the organisation up to external threats. Attackers and automated tooling are constantly searching public code repositories like those listed above for secrets that development teams inadvertently leave behind.
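The same kind of search that attackers run can be run internally before anything is pushed or synced. The following is a minimal sketch with three illustrative patterns (an AWS-style access key ID, a PEM private key header, and a quoted hard-coded password); the pattern set and the function name are assumptions, and a dedicated secret scanner will cover far more cases.

```python
import re

# Illustrative patterns only; real scanners ship with many more rules.
SECRET_PATTERNS = {
    "AWS access key ID": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private key block": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "hard-coded password": re.compile(r"(?i)\bpassword\s*[:=]\s*['\"][^'\"]{4,}['\"]"),
}


def find_secrets(text: str) -> list:
    """Return (line number, rule label) pairs for every line that matches a rule."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for label, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, label))
    return findings


if __name__ == "__main__":
    sample = 'aws_key = "AKIAIOSFODNN7EXAMPLE"\nprint("hello")\npassword = "hunter22"\n'
    for lineno, label in find_secrets(sample):
        print(f"line {lineno}: {label}")
```

A check like this could run as a pre-commit hook or before a collection is synced to a cloud workspace.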

Why does this happen?

Most organisations do not realise that the files that are uploaded to these platforms are also accessible to external entities. There are no restrictions about the location of the participating businesses, so there is no reason to assume that it is safe to upload confidential documents. While some of these files may be malicious, the majority are not malicious and should never be available for public viewing. If you’ve read this far, you may assume that it would never happen to your organisation, but there are several causes which can lead to these exact scenarios, including:

- Lack of awareness

- Underestimation of attacker capabilities

- Distraction

- Overconfidence

- Internal stressors

- Poor internal processes

What can be done?

You may have read this and realised that the use of external tooling is part of your current processes, so the question is how do you reduce the potential risk to your organisation? The following steps may aid you in tackling this issue:

- Review your current processes

- Determine any processes that have been automated by your organisation which rely on external resources

- Identify the public tooling that is included in these processes (manual and automated)

- Determine what information is stored by the tooling, and verify whether it is secure and only accessible by your organisation

- Determine whether there is an offline / local version that can be run

- Modify your processes accordingly

Essentially, your organisation should perform a threat modelling exercise against your own OPSEC processes to determine the risk exposure and ensure that necessary precautions are taken to reduce the risk to an acceptable level. Additionally, your organisation needs to understand where submissions are originating from – this might be your employees or automated tooling such as SOAR platforms.
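As a starting point for that review, the steps of existing playbooks can be inventoried and checked against a list of public analysis services. The sketch below assumes a hypothetical playbook format (dictionaries with `name`, `source`, and `endpoint` keys) and a short, illustrative host list that you would extend for your own environment.

```python
from urllib.parse import urlsplit

# Illustrative list; extend it with the public services your teams use.
PUBLIC_ANALYSIS_HOSTS = {"virustotal.com", "urlscan.io", "any.run", "tria.ge"}


def is_public_analysis_host(hostname: str) -> bool:
    """Match a hostname against the list, including its subdomains."""
    hostname = hostname.lower().rstrip(".")
    return any(
        hostname == host or hostname.endswith("." + host)
        for host in PUBLIC_ANALYSIS_HOSTS
    )


def external_submissions(steps: list) -> list:
    """Return (step name, source, host) for steps that call a public service."""
    flagged = []
    for step in steps:
        host = urlsplit(step["endpoint"]).hostname or ""
        if is_public_analysis_host(host):
            flagged.append((step["name"], step["source"], host))
    return flagged


if __name__ == "__main__":
    playbook = [
        {"name": "enrich-url", "source": "soar", "endpoint": "https://urlscan.io/api/v1/scan/"},
        {"name": "lookup-asset", "source": "soar", "endpoint": "https://cmdb.internal.example/api/assets"},
    ]
    for name, source, host in external_submissions(playbook):
        print(f"{name} ({source}) sends data to {host}")
```

The `source` field keeps track of whether a submission comes from an analyst or from automated tooling, which is the distinction drawn above.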

While this blog post attempted to shed light on certain areas that could be inadvertently placing your organisation at risk, it does not provide a method for deciding how to classify different intellectual property within an organisation. In order to assist with this, most organisations make use of the Traffic Light Protocol (TLP), which was originally created “to facilitate greater sharing of potentially sensitive information and more effective collaboration”. The Forum of Incident Response and Security Teams (FIRST) has released a new version, TLP 2.0, so it may be a good time to have a look at what should be shared within the public domain during day-to-day operations of internal security teams. If your organisation is currently using the TLP, TLP 2.0 has introduced a few changes which will assist in more fine-grained tuning.
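TLP labels can also act as a gate in automated workflows. The sketch below uses the five TLP 2.0 labels and assumes a deliberately strict, hypothetical policy in which only TLP:CLEAR material may be sent to public tooling; unlabelled or unrecognised material (including the retired TLP:WHITE label) is refused.

```python
from enum import Enum


class TLP(Enum):
    """The five labels defined by TLP 2.0."""
    RED = "TLP:RED"
    AMBER_STRICT = "TLP:AMBER+STRICT"
    AMBER = "TLP:AMBER"
    GREEN = "TLP:GREEN"
    CLEAR = "TLP:CLEAR"


# Assumed policy: only TLP:CLEAR may leave the organisation via public tools.
PUBLIC_TOOLING_ALLOWED = {TLP.CLEAR}


def may_submit_to_public_tool(label: str) -> bool:
    """Fail closed: unknown or missing labels are treated as not shareable."""
    try:
        tlp = TLP(label.strip().upper())
    except ValueError:
        return False
    return tlp in PUBLIC_TOOLING_ALLOWED


if __name__ == "__main__":
    for label in ("TLP:CLEAR", "tlp:amber+strict", "TLP:WHITE", ""):
        print(repr(label), may_submit_to_public_tool(label))
```

Your own policy may be more permissive for some tools, but starting from a default of refusal makes exceptions explicit.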

Blue Team Training

In addition to reviewing internal processes, blue team training is an essential part of securing your organisation. To function effectively, the processes that blue teams/SOC analysts follow should be documented and training on the following topics should (at a minimum) be provided [12]:

- Training on effectively using SOC tooling

- Training on how to collect, organise, and use relevant threat data in a Threat Intelligence Platform (TIP)

- Principles of success for endpoint security data collection whether you use a SIEM, EDR, or XDR

- Alert Triage and how to quickly and accurately triage security incidents using data correlation and enrichment techniques

- How to best use incident management systems to effectively analyse, document, track, and extract critical metrics from security incidents

- Crafting automation workflows for common SOC activities which do not pose a risk to your organisation

Additionally, guidelines on analysing malicious events should be provided to the blue team. Example guidelines are provided below:

- What should not be uploaded to public resources? When information is placed on any site, the information, as well as the location of the request, is exposed. In general, any malware or information related to your organisation should not be uploaded to external sites. Scripts, executables, images, files, and encoded data can contain information related not only to the targeted host, but to the organisation. It can also tip off an attacker that their malware is being analysed by the security team.

- What should not be analysed on your workstation? Even though there are certain precautions taken by SOC analysts, you can never be too careful when analysing malware. There are hundreds of malware samples that are capable of escaping virtual machines (VMs). Analysts should never visit potentially malicious websites directly and malware should never be analysed directly on an analyst’s machine. Instead, they should use available resources such as a SOC investigation server or SOC sandboxes.

- What if internal tooling does not exist? What if you are investigating a host and you encounter a new IoC that cannot be deciphered with internal tooling?

- Communication: Communicating this to the team and your manager should (hopefully) ensure that new internal tooling is procured for your organisation.

- Permission: You need to understand the risk of uploading the data to an external source and what it could mean for your company, and you need to get sign-off from your manager.

Conclusion

This blogpost has covered a basic investigation process, common tooling used by blue teams, and potential pitfalls associated with automated uploads. It also provided information about how security tooling could be used by malicious parties to obtain sensitive information through data leaks which are putting your organisation at risk. If nothing else, I hope that it has convinced you to review the processes that your internal security teams follow and has shown the importance of understanding the risks associated with security tooling.

If you are interested in starting your journey into securing your internal processes or simply have a question, feel free to reach out!

References

- [1]: https://csrc.nist.gov/glossary/term/operations_security

- [2]: https://www.nist.gov/news-events/news/2022/05/nist-publishes-review-digital-forensic-methods

- [3]: https://nvlpubs.nist.gov/nistpubs/ir/2022/NIST.IR.8354-draft.pdf

- [4]: https://www.f5.com/company/blog/garbage-in-garbage-out-dont-automate-broken-processes#:~:text=If%20you%20automate%20a%20poor,you’re%20trying%20to%20optimize.

- [5]: http://essay.utwente.nl/84945/1/__ad.utwente.nl_Org_BA_Bibliotheek_…_MA_eemcs.pdf

- [6]: https://www.mbsecure.nl/blog/2018/9/opsec-for-blue-teams-part-1

- [7]: https://www.mbsecure.nl/blog/2018/9/opsec-for-blue-teams-part-2

- [8]: https://www.mbsecure.nl/blog/2018/9/opsec-for-blue-teams-part-3

- [9]: https://positive.security/blog/urlscan-data-leaks

- [10]: https://www.businesstimes.com.sg/technology/alphabets-virus-scanner-misuse-can-leak-data

- [11]: https://www.malwarebytes.com/blog/news/2022/04/why-you-shouldnt-automate-your-virustotal-uploads

- [12]: https://www.sans.org/cyber-security-courses/blue-team-fundamentals-security-operations-analysis/
